The final presidential debate between Donald Trump and Joe Biden took place last Thursday, October 22nd, 2020. The transcript for the debate can be found here:

Using a web-scraping tool, I was able to access the digital transcript and isolate each candidate's speaking portions in order to run some exploratory text analysis. Disclaimer: this post will not include any political commentary. Rather, I am interested in uncovering any patterns in the transcript.
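The post doesn't show how the scraped transcript was split into speaking portions, so the following is only a minimal sketch under an assumed format: one speaking turn per line, written as `Speaker Name: words spoken`. The `split_turns` and `count_turns` helpers, the regex, and the placeholder speakers and lines are all hypothetical. Once the turns are grouped by speaker, counting them tells us how many times each person spoke.

```python
import re
from collections import defaultdict

# Hypothetical format: one turn per line, "Speaker Name: words spoken".
# The speaker part may contain only letters, spaces, and periods, so the first colon ends it.
TURN_PATTERN = re.compile(r"^(?P<speaker>[A-Z][A-Za-z .]*):\s*(?P<text>.+)$")


def split_turns(transcript):
    """Group each speaking turn under its speaker's name, in transcript order."""
    turns = defaultdict(list)
    for line in transcript.splitlines():
        match = TURN_PATTERN.match(line.strip())
        if match:  # skip blank lines and lines that don't look like "Name: text"
            turns[match["speaker"].strip()].append(match["text"].strip())
    return dict(turns)


def count_turns(turns):
    """Number of times each speaker took the floor."""
    return {speaker: len(texts) for speaker, texts in turns.items()}


# Placeholder text, not quotes from the debate.
sample = """Moderator: Good evening.
Candidate A: Thank you very much.
Candidate B: Thank you.
Candidate A: That is simply not true."""

print(count_turns(split_turns(sample)))
# {'Moderator': 1, 'Candidate A': 2, 'Candidate B': 1}
```

A real transcript would likely need extra handling (timestamps, speeches that span several lines), which is why the pattern is kept deliberately narrow here.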

First off, how many times did each candidate speak?

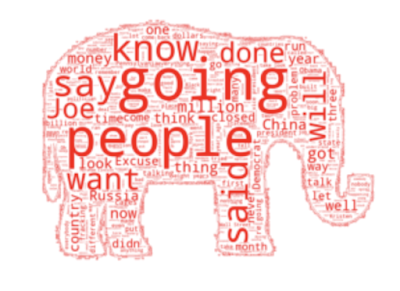

Donald Trump, despite the first-ever introduction of a mute button during a presidential debate, was credited with speaking 62 more times than his opponent. Next, which words did each candidate use most frequently? With minimal processing, here are the results for each candidate:

Visualized a different way, the results show significant overlap between the two:

These top-word breakdowns get a little more interesting when we add some of the most frequently occurring words to our 'stop' list (words removed from consideration because they are not informative). For example, if we remove 'going', 'said', and 'people' from the analysis, we get these updated top-word breakdowns:

I'll let readers come to their own conclusions about these updated top words from each candidate. I also ran this text processing for the moderator, and her top word by a long shot was "President".
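A minimal sketch of the top-word counts and the extended stop list, using only Python's standard library. The tiny `BASE_STOP_WORDS` set and the `top_words` helper are illustrative assumptions (a real analysis would use a fuller list, such as scikit-learn's or NLTK's English stop words), and the sample sentence is made up.

```python
import re
from collections import Counter

# Deliberately tiny stop list for illustration only.
BASE_STOP_WORDS = {"the", "a", "and", "to", "of", "i", "we", "are", "it", "is", "that", "in", "you", "they"}


def top_words(text, stop_words, n=5):
    """Return the n most common lowercase words in text, skipping stop words."""
    words = re.findall(r"[a-z']+", text.lower())
    return Counter(w for w in words if w not in stop_words).most_common(n)


sample = "We are going to win. People said we are going to create jobs. The people want jobs."

print(top_words(sample, BASE_STOP_WORDS, n=3))
# [('going', 2), ('people', 2), ('jobs', 2)]

# Extend the stop list with frequent but uninformative words, as in the post.
extended = BASE_STOP_WORDS | {"going", "said", "people"}
print(top_words(sample, extended, n=1))
# [('jobs', 2)]
```

Ties keep first-seen order because `Counter.most_common` orders equal counts by when they were first encountered.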

Another common text-analysis technique is counting words, both the overall total number of words and the number of unique words:
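Both counts take only a few lines of plain Python. This sketch assumes a simple lowercase regex tokenizer, and `word_counts` is a hypothetical helper; a different tokenizer (for example, one that splits contractions) would give somewhat different totals.

```python
import re


def word_counts(text):
    """Return (total words, unique words), ignoring case."""
    words = re.findall(r"[a-z']+", text.lower())
    return len(words), len(set(words))


print(word_counts("We will win. We will win big."))  # (7, 4)
```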

The first concept that most people associate with natural language processing is sentiment analysis. Using the sentiment calculator in the TextBlob package, I scored the sentiment (polarity) and subjectivity of each candidate's responses, visualized below:

From these figures, it would appear that Joe Biden had a more uplifting message in his communications that evening. However, these sentiment scores should always be taken with a grain of salt: what counts as "positive" in a political debate may differ substantially from what counts as positive in the corpus on which the sentiment model was trained.

Now, with some exploratory data analysis done, I would like to build a classifier. Its input would be a quote from the debate, and its output would be the model's prediction of who said that quote.

In other words, can we build a model that predicts which candidate said a specific quote? To create this model, we will use a count vectorizer to build a data frame of word frequencies. I'll spare readers the details of how exactly this process works, unless you'd like to check out the code here.

I used the count vectorizer technique to fit and assess 4 different models. Their respective accuracy scores are plotted below:
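The post doesn't name its four models, so this sketch uses four common scikit-learn text classifiers as stand-ins (multinomial naive Bayes, logistic regression, a linear SVM, and a decision tree). Treat that choice, the 25% stratified test split, and the hypothetical `compare_models` helper as assumptions. Wrapping the vectorizer and each model in one pipeline means the vocabulary is learned from the training quotes only.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import make_pipeline
from sklearn.svm import LinearSVC
from sklearn.tree import DecisionTreeClassifier


def compare_models(quotes, speakers, test_size=0.25, seed=42):
    """Fit several count-vectorizer classifiers and return each one's test accuracy."""
    X_train, X_test, y_train, y_test = train_test_split(
        quotes, speakers, test_size=test_size, random_state=seed, stratify=speakers
    )
    models = {
        "naive_bayes": MultinomialNB(),
        "logistic_regression": LogisticRegression(max_iter=1000),
        "linear_svm": LinearSVC(),
        "decision_tree": DecisionTreeClassifier(random_state=seed),
    }
    scores = {}
    for name, model in models.items():
        # The vectorizer is refit inside each pipeline on the training split only.
        pipeline = make_pipeline(CountVectorizer(), model)
        pipeline.fit(X_train, y_train)
        scores[name] = accuracy_score(y_test, pipeline.predict(X_test))
    return scores
```

With only a few hundred quotes, a single split can swing accuracy by several points, so cross-validation (for example `cross_val_score` on each pipeline) gives a steadier comparison.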

The accuracy of these four models was comparable, ranging from 76% to 79%. However, these were pretty basic models, and if it is worth doing, it is worth overdoing! I then built a much more sophisticated random forest model with two goals:

1. Improving accuracy.

2. Finding out which words were most important in deciding who said what.

With a relatively small sample size, I was unable to improve accuracy drastically, but I was able to extract the words that were most important to this beefed-up model:

Most of these words may be circumstantial, and therefore not very informative if we apply this model to future text, but the one word that stood out above the rest was "true". It appears that when the word "true" appears in a quote, the model has a better idea of who said it. This, paired with Joe Biden's top word (after processing) being "fact", is pretty interesting! Thanks for reading.
